Seeing Rising Election Misinformation, Americans Say Social Media Platforms May Bear Responsibility for Political Violence

Justin Hendrix, Ben Lennett / Nov 1, 2024

With the 2024 election just days away, Americans express growing concern about election-related misinformation on social media, with 65% believing the problem has worsened since 2020, according to a Tech Policy Press/YouGov survey of 1,089 voters fielded from October 24 to October 25, 2024. The results reveal broad, bipartisan support for social media companies taking a more active role in content moderation, with 71% of respondents favoring platforms prioritizing the prevention of false claims over unrestricted expression and 72% believing political figures should be held to higher standards than regular users due to their outsized influence.

“Heading into the post-election period, clear majorities of Americans on both sides of the aisle want social media companies to do more to protect democracy,” noted Daniel Kreiss, a professor in the Hussman School of Journalism and Media and a principal researcher of the Center for Information, Technology, and Public Life at UNC Chapel Hill, who reviewed a copy of the poll results provided by Tech Policy Press.

Regarding specific interventions, voters show the strongest support for warning labels on potentially false content (56%), though views on more aggressive measures like account suspensions and permanent bans vary significantly by party affiliation. Notably, while Democrats and Republicans may differ on the appropriate extent of content moderation, the Congressional certification of election results emerges as one natural point for platforms to begin to more aggressively moderate election fraud claims and to take action on groups, pages, and accounts that propagate such claims.

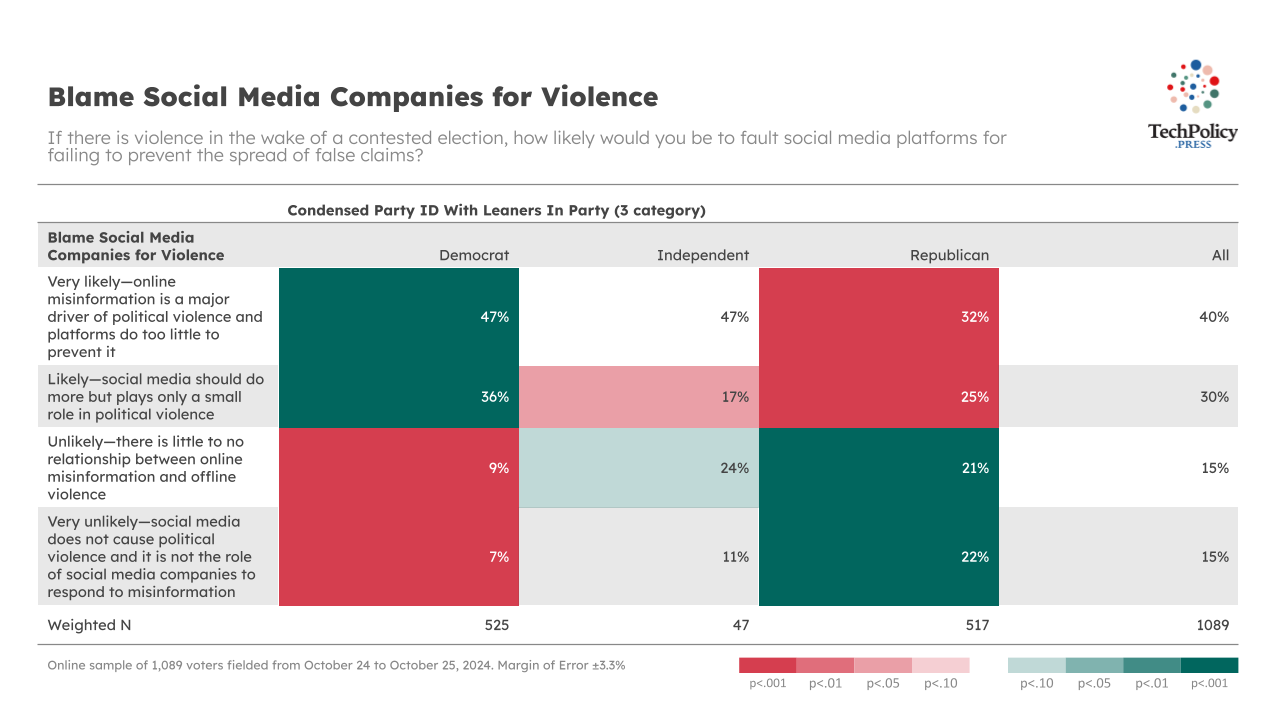

The survey also reveals that 70% of Americans would likely cast some of the blame on social media platforms if political violence follows a contested election, though this sentiment is significantly stronger among Democrats (83%) than Republicans (57%).

“These findings demonstrate that, despite what some of the loudest voices might be saying, most Americans actually do believe social media companies have a civic responsibility to protect democratic processes, including ensuring they are not helping fuel potential political violence,” said Yaël Eisenstat, a Senior Policy Fellow at NYU Cybersecurity for Democracy.

Below are the results in more detail.

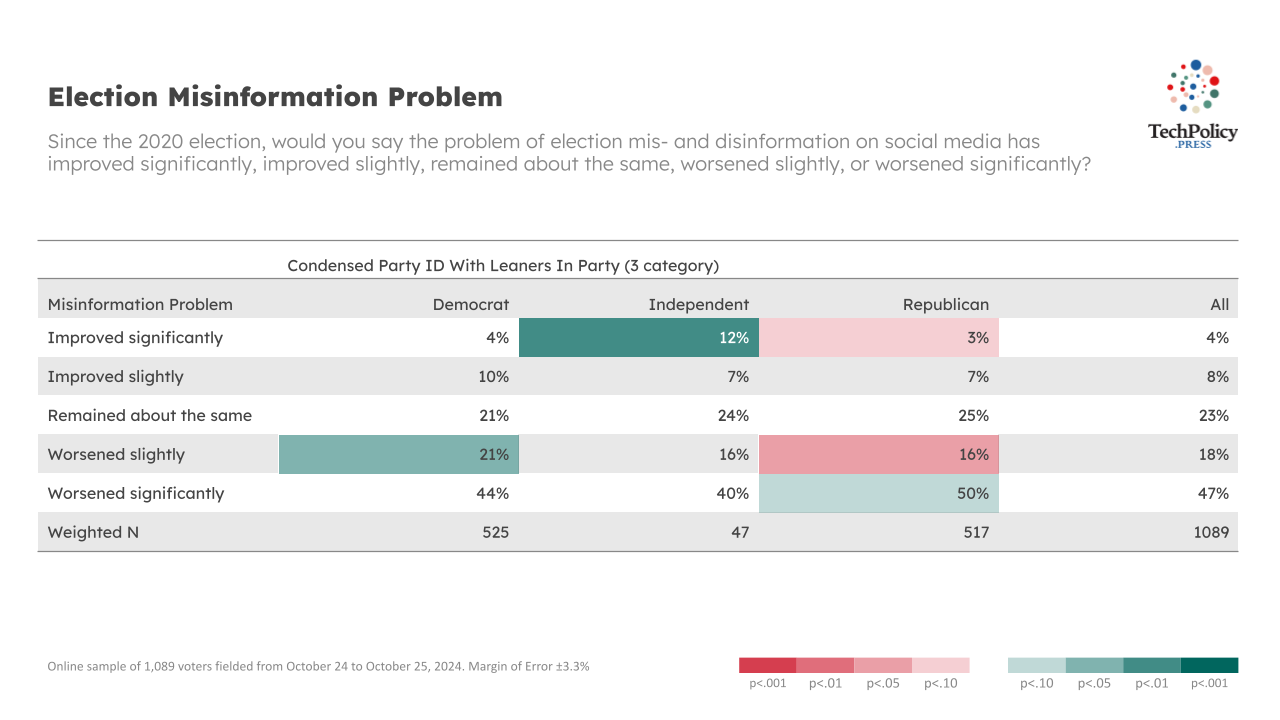

Respondents believe the problem of election misinformation is worse than in 2020

When asked whether the problem of election mis- and disinformation on social media has improved or worsened since the 2020 election, a strong majority of Americans (65% in total) believe the problem has worsened either slightly or significantly, while only 12% believe it has improved.

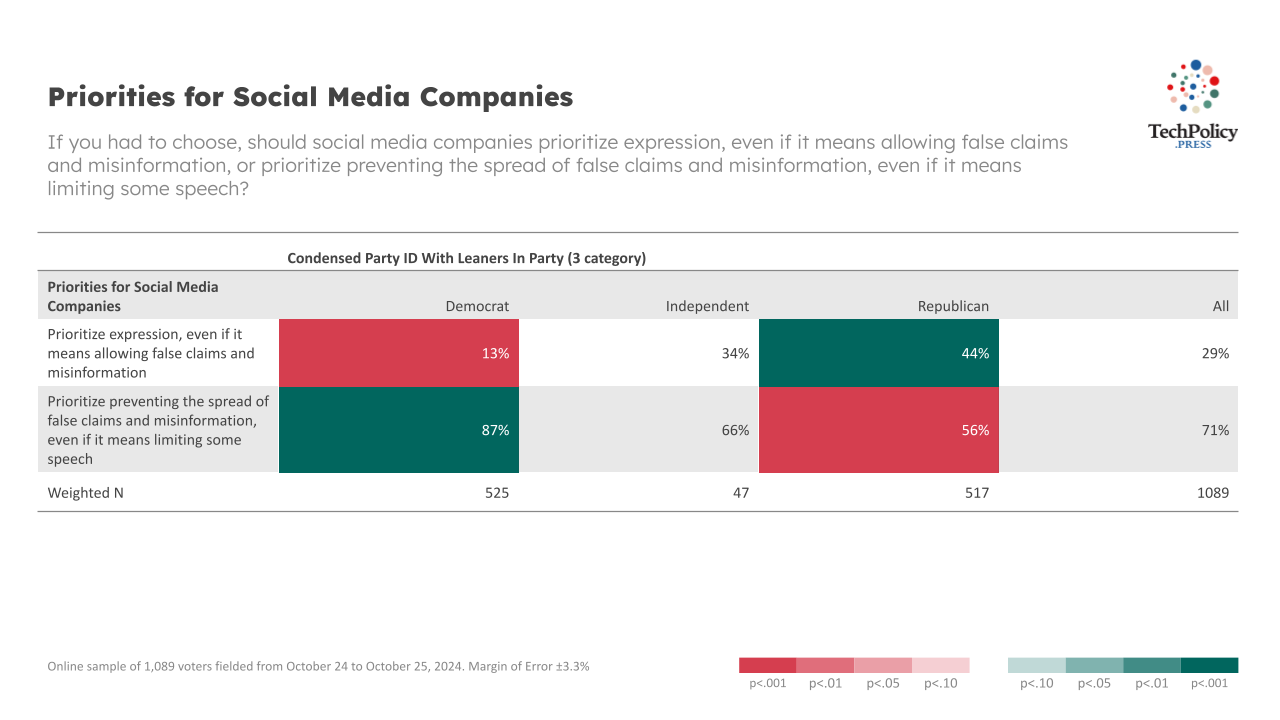

Respondents want platforms to prioritize addressing the spread of false claims

When asked, “If you had to choose, should social media companies prioritize ensuring expression or preventing the spread of false claims and misinformation in the event of a contested election?” a clear majority of voters – 71% – say companies should prioritize preventing false claims and misinformation, even if it means limiting some speech.

29% say companies should prioritize expression, even if it means allowing false claims and misinformation. While all groups favor preventing false claims over unrestricted expression, the strength of this preference varies significantly by party, with Democrats showing the strongest support for content moderation and Republicans being more evenly split on the issue.

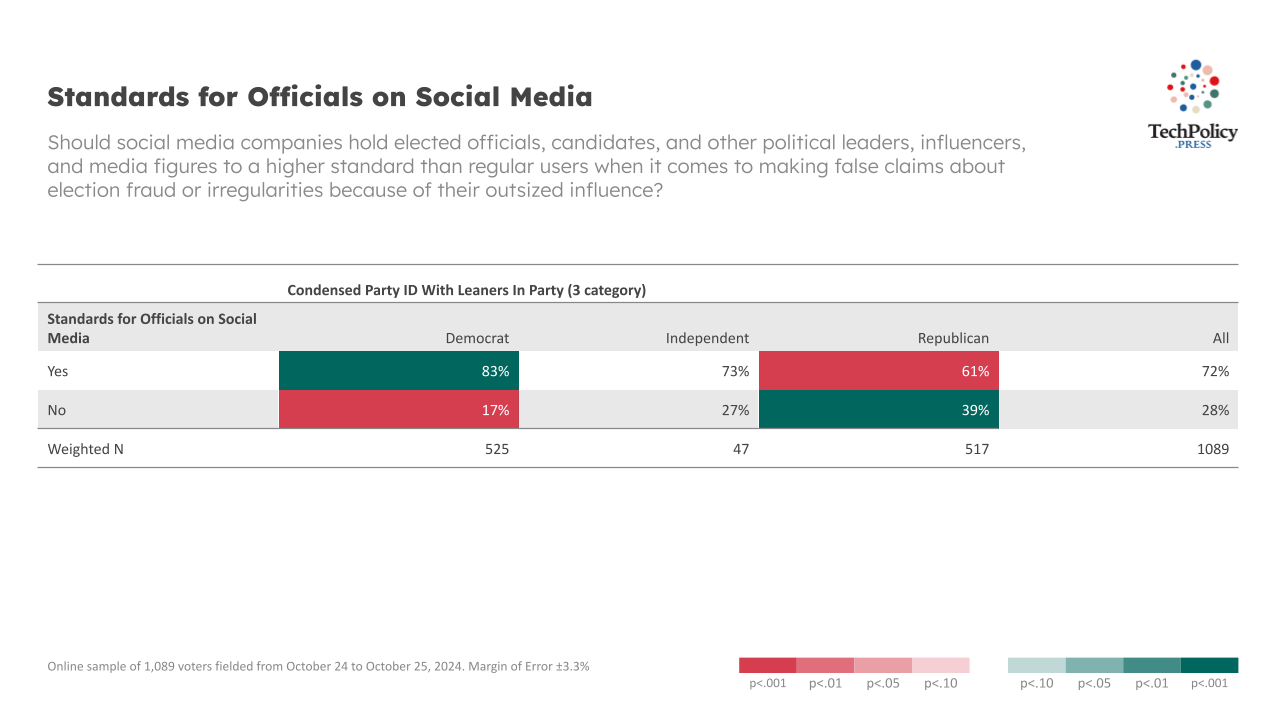

Respondents want social media companies to hold political figures to a higher standard

When asked, “Should social media companies hold elected officials, candidates, and other political leaders, influencers, and media figures to a higher standard than regular users when it comes to making false claims about election fraud or irregularities because of their outsized influence?” 72% say yes, these figures should be held to a higher standard, while 28% say no, they should not be held to a higher standard. Democrats show the strongest support for a higher standard (83% support), while a strong majority of Republicans also support a higher standard (61% support).

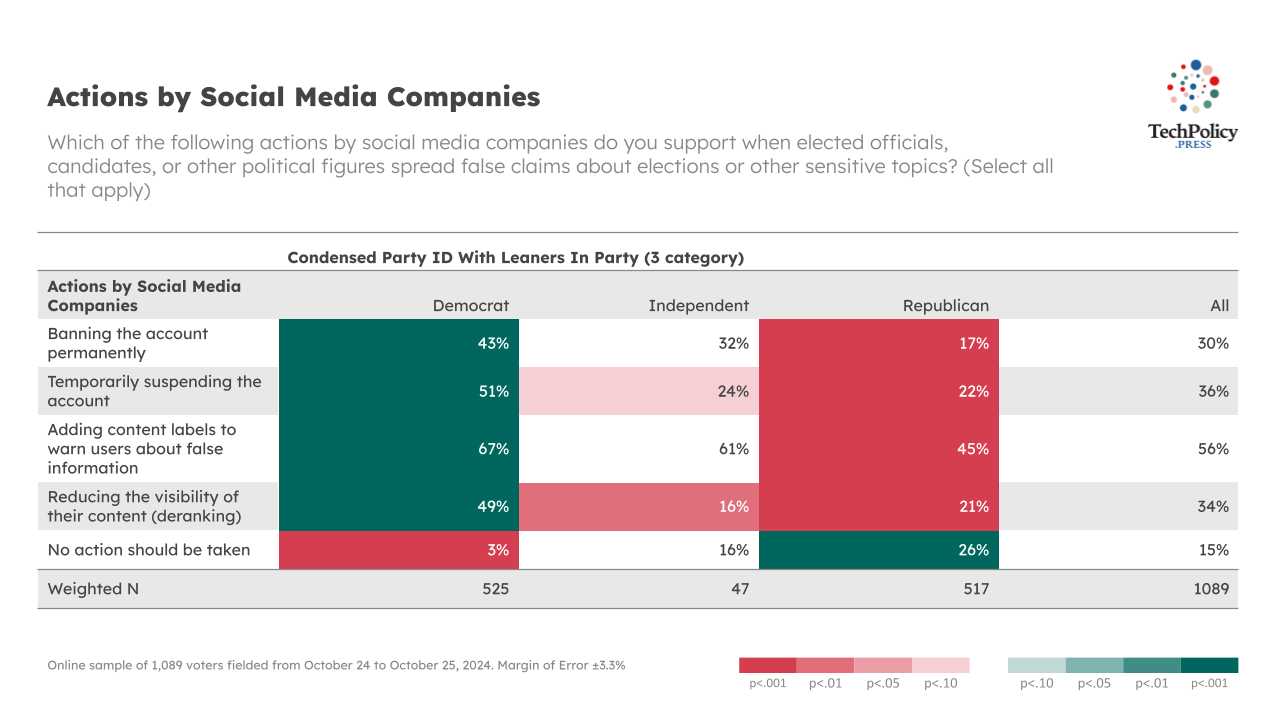

Warning labels are the most favored intervention to address false claims

When asked about support for different types of actions social media companies might take against public figures who spread false claims about elections, 56% support adding content warning labels. 36% support temporary account suspension, 34% support reducing content visibility (deranking), 30% support permanent account bans, and 15% say no action should be taken. Democrats generally show stronger support for all enforcement actions, while Republicans show lower support for interventions overall, with about a quarter saying no action should be taken.

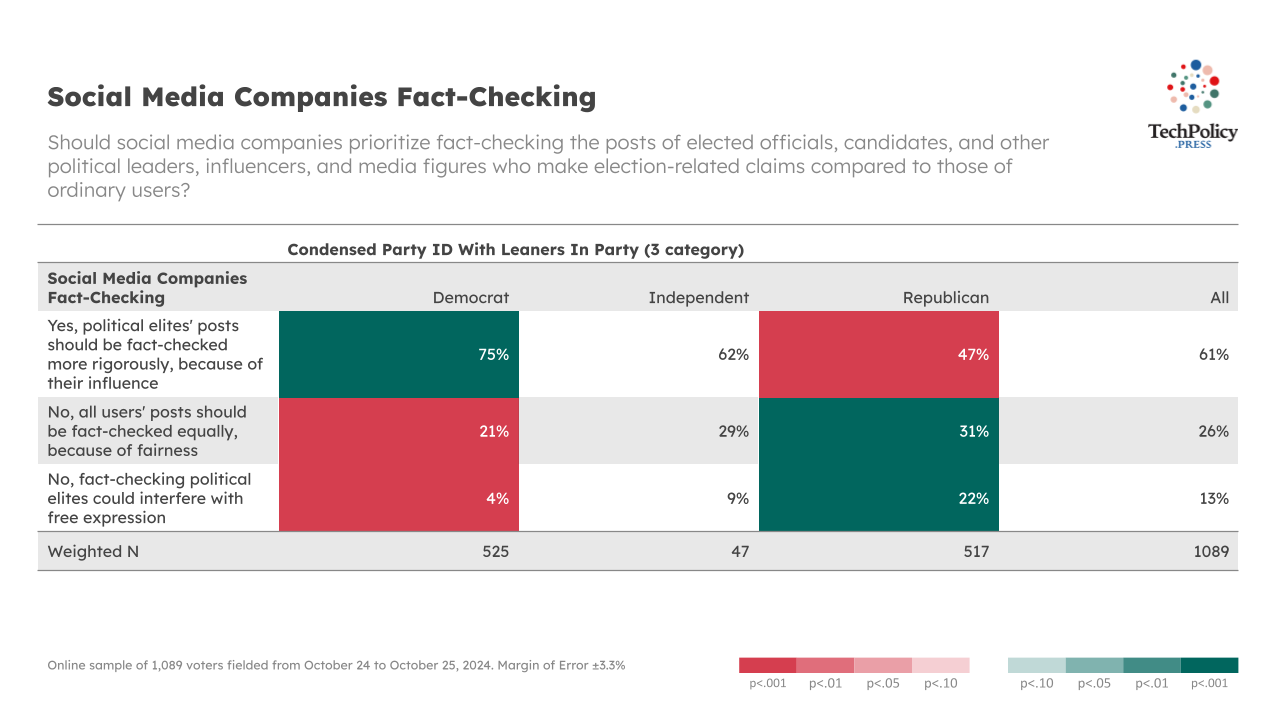

Respondents believe fact-checking political figures should be prioritized over regular users

Asked about whether social media companies should prioritize fact-checking posts from political figures over ordinary users, 61% say yes, political elites should be fact-checked more rigorously due to their influence. 26% say no, all users should be fact-checked equally for fairness. Only 13% say no, fact-checking political elites could interfere with free expression. Democrats show the strongest support (75%), while Republicans show more divided views, though still with plurality support (47%).

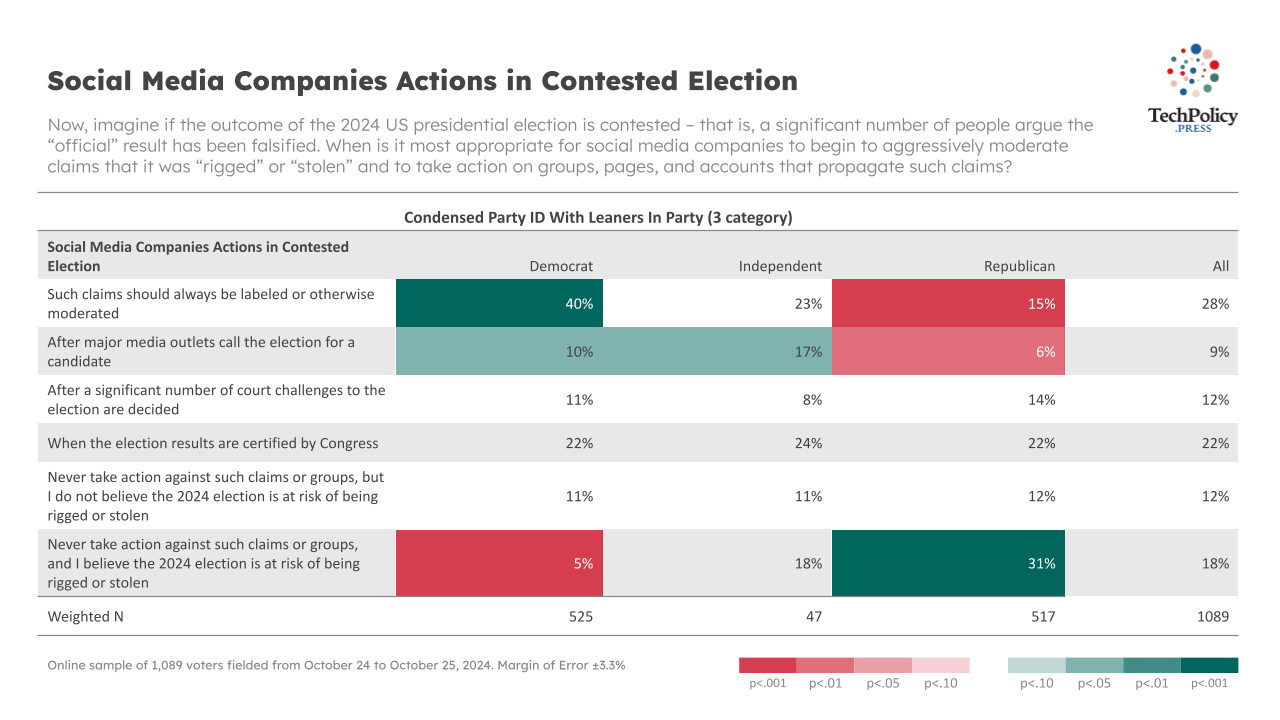

Congressional certification appears to be a natural point by which platforms should more aggressively moderate claims of election fraud

Voters were asked, “Now, imagine if the outcome of the 2024 US presidential election is contested – that is, a significant number of people argue the ‘official’ result has been falsified. When is it most appropriate for social media companies to begin to aggressively moderate claims that it was ‘rigged’ or ‘stolen’ and to take action on groups, pages, and accounts that propagate such claims?” In response, 28% say such claims should always be labeled/moderated. 9% say they should be moderated after major media outlets call the election, 12% say after court challenges are decided, and 22% say when Congress certifies the results. 12% say platforms should never take action but do not believe the 2024 election is at risk, and 18% say platforms should never take action and believe the 2024 election is at risk.

Here, there are significant partisan differences, with Democrats most likely to support immediate moderation (40%). Republicans are more likely to oppose any moderation, with 31% believing that platforms should never take action against election misinformation and that the election is at risk of being stolen. Congressional certification emerges as a consistently supported point for more aggressive moderation to commence, finding support among 22% of Democrats and 24% of Independents and Republicans.

Respondents believe social media platforms will bear blame for political violence following a contested election

Asked whether social media platforms should bear some blame for any violence following a contested election, 40% say they are "very likely" to blame platforms, viewing misinformation as a major driver of violence, while 30% say they are "likely" to blame platforms but see social media as playing only a small role. Only 30% are unlikely or very unlikely to blame the platforms. Democrats show a stronger conviction about platforms' responsibility (83% responding very likely or likely) compared to Republicans (57% responding very likely or likely).

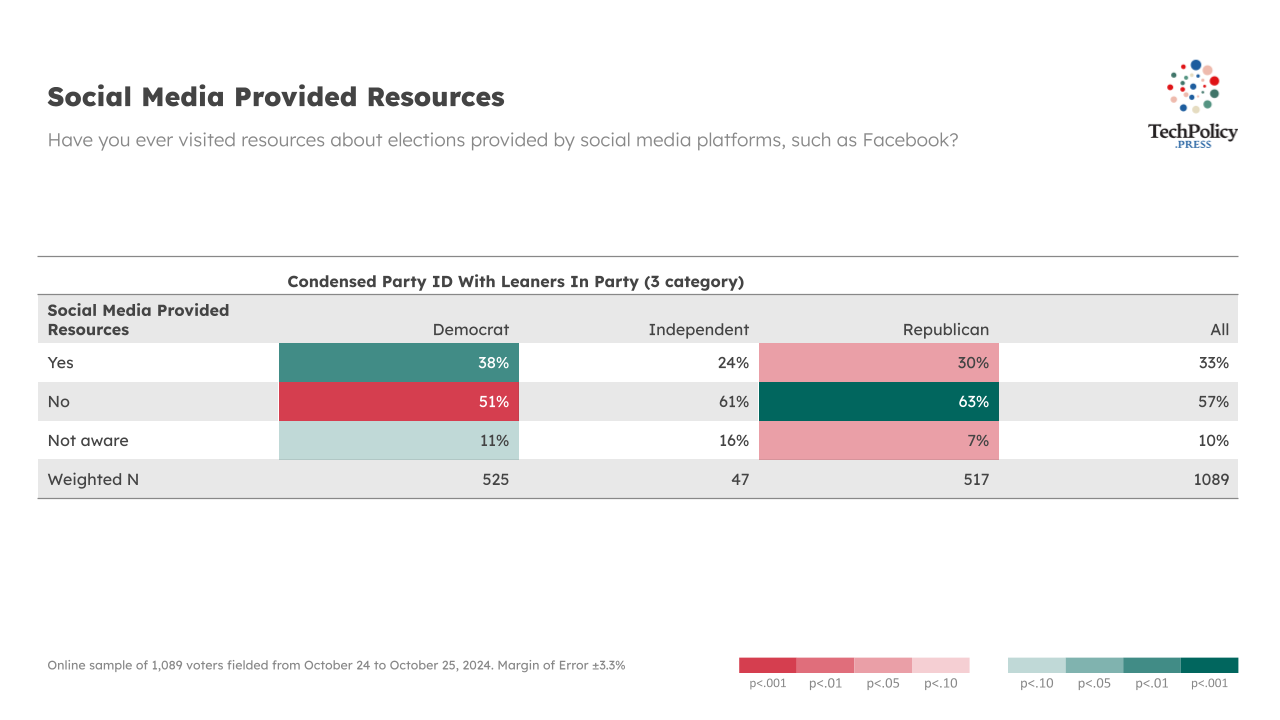

Most users say they have not visited election resources provided by social media platforms

Asked whether they had visited election-related resources provided by social media platforms like Facebook, 57% said no, they have not, while only 33% said yes, they have visited these resources, and 10% said they are not aware of such resources. This result marks a general decline in both awareness and claimed usage of social media election resources across all party affiliations from a June poll that posed the same question.

A possible consensus around election misinformation?

These results suggest the potential for public consensus that social media platforms should not be passive observers in electoral disputes, with majorities supporting proactive content moderation and accepting some potential limitations on expression to prevent the spread of false claims. Of course, how to apply such limitations and in what circumstances remains divisive. If there is substantial political violence following the 2024 election, public support for platforms to take a more aggressive posture on protecting election integrity may increase.

Authors